Today we’re going to talk about managing back-end long-running asynchronous tasks in Angular. The term may sound long and scary, but don’t freak out. By the end of this article, you’ll be familiar with the concept and able to handle this use case in your own projects.

Why Angular?

At Inero Software, we use Angular on the front end of most of our projects, and not without reason:

- It suits large software systems: its component-based architecture promotes higher code quality, better maintainability, and code reuse.

- Native TypeScript support. TypeScript means types, and types mean safer code.

- Unlike many other frameworks, Angular ships with predefined solutions to most problems you may run into, so you don’t have to pick a library for every single case, whether it’s routing, reactive programming, or making HTTP requests.

What’s a back-end long-running asynchronous task?

The easiest way to understand what’s a back-end long-running asynchronous task is to explain it with an example of our product which possesses this kind of task.

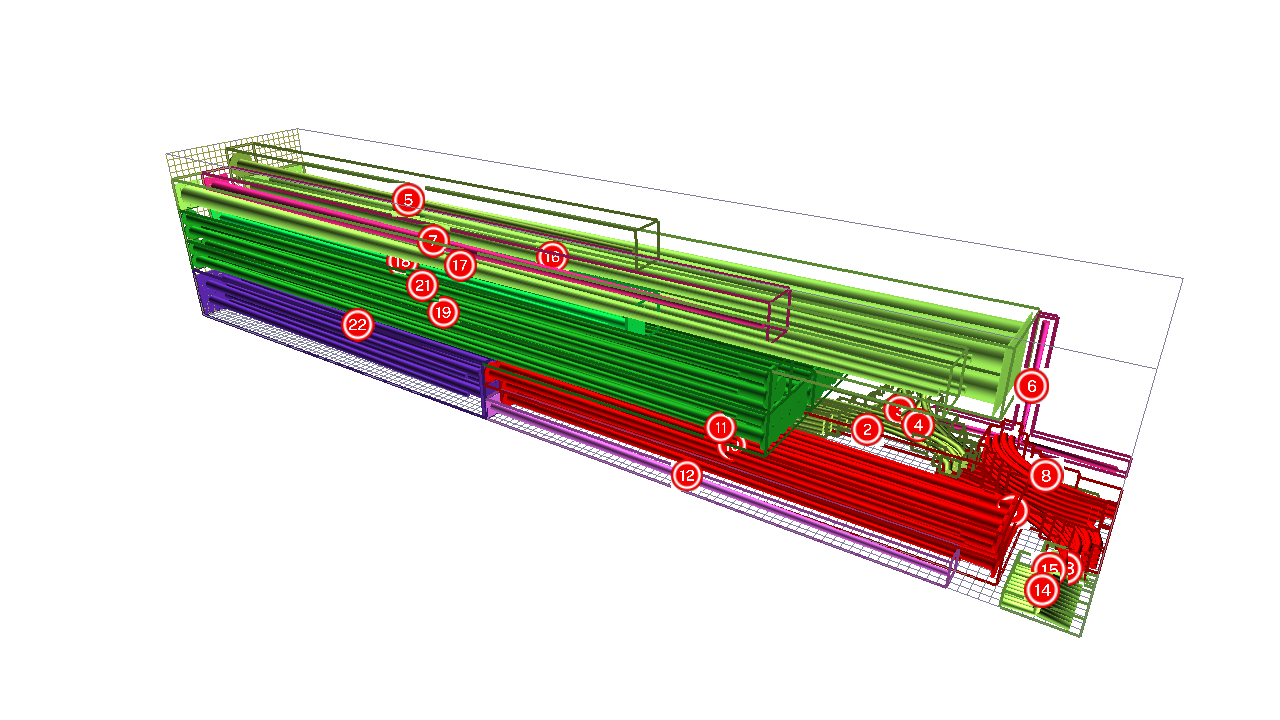

At Inero Software, we are developing DeliverM8, a delivery optimization platform. It has many features, but let’s focus on the one that matters for today’s article. Imagine that you run a company that delivers products all around the country. You need a system that tells you how many and which products to put onto a truck, and in what order, so that everything fits nicely.

The input consists of the following data:

- How many products should be delivered?

- What are their sizes?

- Where do they go? (so we know which products should be at the beginning or end of the truck, etc.)
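To make the input a bit more concrete, here is one way it could be typed in TypeScript. This is a hypothetical sketch, not DeliverM8’s actual data model: the interface and field names (ProductToDeliver, stopIndex and so on) are made up for illustration. The helper only shows why the destination matters, using a naive last-in-first-out rule; the real optimizer also has to solve the 3D packing problem.

```typescript
// Hypothetical input model; not DeliverM8's real API.
interface Dimensions {
  lengthCm: number;
  widthCm: number;
  heightCm: number;
}

interface ProductToDeliver {
  id: string;
  quantity: number; // how many items should be delivered
  size: Dimensions; // what their sizes are
  stopIndex: number; // where they go: 0 = first delivery stop
}

interface TruckLoadRequest {
  products: ProductToDeliver[];
}

// Naive last-in-first-out rule: products for later stops are loaded first
// (deepest in the truck), so products for the first stop end up by the doors.
// Returns a sorted copy and leaves the request untouched.
function naiveLoadingOrder(request: TruckLoadRequest): ProductToDeliver[] {
  return [...request.products].sort((a, b) => b.stopIndex - a.stopIndex);
}

const request: TruckLoadRequest = {
  products: [
    { id: "chairs", quantity: 4, size: { lengthCm: 50, widthCm: 50, heightCm: 90 }, stopIndex: 0 },
    { id: "table", quantity: 1, size: { lengthCm: 160, widthCm: 90, heightCm: 75 }, stopIndex: 2 },
    { id: "lamps", quantity: 2, size: { lengthCm: 30, widthCm: 30, heightCm: 60 }, stopIndex: 1 },
  ],
};

console.log(naiveLoadingOrder(request).map((p) => p.id)); // [ 'table', 'lamps', 'chairs' ]
```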

The output is a 3D visualization which looks like this:

As you may imagine, it takes time for a back-end service to create output like this: the AI behind DeliverM8 runs millions of operations per second to find the best available arrangement of the items. This is the back-end long-running asynchronous task. Depending on the input, it may take up to 10 minutes, and freezing the front-end application until the data is retrieved is the last thing we want. So what should we do?

Approaches

DISCLAIMER: All examples are written in Angular, but the concepts below can easily be applied to other frameworks and languages.

Synchronous

Probably the worst option, but it should be mentioned nevertheless. If the user isn’t supposed to use the application while waiting for the response anyway, you can simply show a modal loading screen until the data arrives, and the problem is solved.

How:

You send a single POST request that tells the back end to start the calculations; the back end returns the result in the response to that same request.

Example:

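```typescript
import { HttpClient } from '@angular/common/http';
import { Observable } from 'rxjs';

// Inside a service with `private http: HttpClient` injected.
// One POST starts the calculation; its response body carries the result.
public loadTrucks(loadTruckData): Observable<Route[]> {
  return this.http.post<Route[]>('/api/load-trucks', loadTruckData);
}
```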
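Whichever way you show the loading screen, it should disappear even when the request fails. Here is a framework-free sketch of that idea; LoadingState and withBlockingModal are hypothetical names, and the slow request is simulated with setTimeout rather than a real HTTP call.

```typescript
// Hypothetical stand-in for the state a loading modal would bind to.
class LoadingState {
  visible = false;
}

// Shows the blocking modal for the whole duration of the request and hides it
// afterwards, whether the request succeeded or failed.
async function withBlockingModal<T>(state: LoadingState, request: () => Promise<T>): Promise<T> {
  state.visible = true;
  try {
    return await request();
  } finally {
    state.visible = false;
  }
}

// Usage: simulate a slow back-end call with a timer instead of a real HTTP request.
const modal = new LoadingState();
withBlockingModal(modal, () => new Promise<string>((resolve) => setTimeout(() => resolve("routes"), 100)))
  .then((result) => console.log(result, modal.visible)); // routes false
console.log(modal.visible); // true (the request is still pending)
```

In an Angular component, the same guarantee is usually expressed by piping the loadTrucks observable through RxJS’s finalize operator, whose callback runs on completion, on error, and on unsubscription.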

Polling

Polling is a slightly better option. If the user should be able to keep using the application while the data loads, and you don’t have much time to invest in the solution and/or your back end doesn’t offer WebSockets, polling is the way to go.

Polling means repeatedly checking the status of something (in this case, a back-end endpoint) at regular intervals. On the front end, that means making a GET request every few seconds to see whether the asynchronous task has finished. On the back end, it means adding a GET endpoint that returns the calculated data (if it has already been calculated).
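On the back-end side, the POST and GET endpoints need a shared place where the job’s state lives. The sketch below is a hypothetical in-memory version, written in TypeScript to match the rest of the examples; JobStore and JobState are illustrative names, and a real service would typically persist jobs and run the optimizer in a separate worker. The start method would back the POST endpoint and get would back the GET endpoint.

```typescript
// Hypothetical job states; a real API might use different names or status codes.
type JobState<T> =
  | { status: "pending" }
  | { status: "done"; data: T }
  | { status: "failed"; error: string };

// Hypothetical in-memory store shared by the POST and GET endpoints.
// A production service would persist jobs and run the optimizer in a worker.
class JobStore<T> {
  private jobs = new Map<string, JobState<T>>();
  private nextId = 1;

  // Backs the POST endpoint: registers the job, starts the work without
  // waiting for it, and returns the job id immediately.
  start(work: () => Promise<T>): string {
    const id = String(this.nextId++);
    this.jobs.set(id, { status: "pending" });
    work().then(
      (data) => this.jobs.set(id, { status: "done", data }),
      (err) => this.jobs.set(id, { status: "failed", error: String(err) }),
    );
    return id;
  }

  // Backs the GET endpoint: the result if it has been calculated,
  // "pending" while it is still running, undefined for an unknown id.
  get(id: string): JobState<T> | undefined {
    return this.jobs.get(id);
  }
}
```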

Not the most elegant solution out there, but still pretty usable!

How:

We make one POST request that tells the back end to start the calculations, followed by repeated GET requests to fetch the output data once it’s ready.

One way to implement polling is to combine RxJS’s timer with switchMap. Also remember to unsubscribe, because intervals of any kind can easily cause memory leaks. In this example, we use the takeUntil operator, which completes the stream, and thereby unsubscribes from it, as soon as stopPolling emits, for example when the component is destroyed.

Example:

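```typescript
import { timer } from 'rxjs';
import { switchMap, takeUntil } from 'rxjs/operators';

// Poll every 5 s (first request immediately) until stopPolling emits, e.g. in ngOnDestroy
timer(0, 5000).pipe(
  switchMap(() => this.truckService.getLoadedTrucks()),
  takeUntil(this.stopPolling)
).subscribe((trucks) => { /* handle the response */ });
```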
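As written, the polling stream keeps sending a GET request every 5 seconds even after the result has arrived; only stopPolling ends it. Below is a framework-free sketch of a poller that also stops once the back end reports the task as done, and gives up after a maximum number of attempts. PollResult, StopSignal and pollUntilDone are hypothetical names, and the response shape assumes the GET endpoint can report a pending state.

```typescript
// Hypothetical response of the GET endpoint: still running, or finished with data.
type PollResult<T> = { status: "pending" } | { status: "done"; data: T };

// Plays the role of takeUntil(this.stopPolling): call stop(), e.g. in ngOnDestroy.
class StopSignal {
  stopped = false;
  stop(): void {
    this.stopped = true;
  }
}

const sleep = (ms: number): Promise<void> => new Promise<void>((resolve) => setTimeout(() => resolve(), ms));

// Calls check() right away, then every intervalMs, until the task is done,
// maxAttempts requests have been made, or the signal has been stopped.
// Resolves with the data, or with undefined if polling was stopped or gave up.
async function pollUntilDone<T>(
  check: () => Promise<PollResult<T>>,
  intervalMs: number,
  maxAttempts: number,
  signal: StopSignal,
): Promise<T | undefined> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    if (signal.stopped) return undefined;
    const result = await check();
    if (result.status === "done") return result.data; // stop polling once the data is there
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  return undefined;
}
```

In the RxJS version, a similar stop condition can be added with the first operator (or takeWhile with its inclusive flag set to true) placed right after switchMap, while keeping takeUntil(this.stopPolling) for the case where the component is destroyed first.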

WebSockets

WebSocket is a communication protocol (just like HTTP) “for a persistent, bi-directional, full duplex TCP connection from a user’s web browser to a server”. In practice, this means the server can push data to your front-end application without the application making any extra requests (as it would with polling).

With the WebSocket approach, you send an HTTP POST request to start calculating the truckload and, at the same time, establish a WebSocket connection between the front-end application and the server (ideally opening it before sending the POST, so that a result that arrives quickly isn’t missed). When the back-end task finishes, the output is sent through that WebSocket connection.

How:

Send an HTTP POST request that tells the back end to start the calculations, and listen on a WebSocket connection through which the back end returns the data when the calculations finish.

Example:

First, connect to the WebSocket:

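```typescript
// Assuming the stompjs package, which provides Stomp.over and Stomp.Frame
import * as Stomp from 'stompjs';

public connect(): void {
  const socket = new WebSocket(this.webSocketUri);
  this.stompClient = Stomp.over(socket);
  // Subscribe only once the STOMP connection has been established
  this.stompClient.connect({}, () => this.subscribe());
}
```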

Once connected, subscribe to the queue on which the back end publishes the results:

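```typescript
private subscribe(): void {
  // Called for every message the back end publishes on this queue
  this.stompClient.subscribe('/user/queue/truck-load-updates-queue', (msg: Stomp.Frame) => {
    const msgBody = JSON.parse(msg.body);
    // ...
  });
}
```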

The subscription callback above is triggered once the calculation is done and the back end publishes the result.
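If several truck loads can run at the same time, the front end also needs to know which POST request a message belongs to. One common way to handle that, sketched below under the assumption that both the POST response and the WebSocket message carry a job id, is to keep the pending jobs in a map keyed by that id. The message shape (jobId, result) and the PendingJobs class are hypothetical. The class also buffers results that arrive before anyone waits for them, which covers a job finishing before the POST response has been processed.

```typescript
// Hypothetical shape of the message published when a truck-load job finishes.
interface JobFinishedMessage<T> {
  jobId: string;
  result: T;
}

// Matches results pushed over the WebSocket to the jobs started with HTTP POST.
class PendingJobs<T> {
  private waiters = new Map<string, (result: T) => void>();
  private early = new Map<string, T>(); // results that arrived before waitFor() was called

  // Call with the job id taken from the POST response.
  waitFor(jobId: string): Promise<T> {
    if (this.early.has(jobId)) {
      const result = this.early.get(jobId) as T;
      this.early.delete(jobId);
      return Promise.resolve(result);
    }
    return new Promise<T>((resolve) => this.waiters.set(jobId, resolve));
  }

  // Call with the raw body of every message received on the queue.
  handleMessage(body: string): void {
    const msg = JSON.parse(body) as JobFinishedMessage<T>;
    const resolve = this.waiters.get(msg.jobId);
    if (resolve) {
      this.waiters.delete(msg.jobId);
      resolve(msg.result);
    } else {
      this.early.set(msg.jobId, msg.result);
    }
  }
}

// Usage: the POST returned "job-42"; later the socket delivers its result.
const jobs = new PendingJobs<string[]>();
jobs.waitFor("job-42").then((routes) => console.log(routes)); // [ 'truck-1' ]
jobs.handleMessage('{"jobId":"job-42","result":["truck-1"]}');
```

In a component, the STOMP callback would pass msg.body to handleMessage, and the code that sends the POST would call waitFor with the job id from its response.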

Conclusion

That’s it!

I’ve described the problem of back-end long-running asynchronous tasks and several ways of handling them in a front-end application.

WebSockets are the best way to go if you are struggling with this problem. But if the user doesn’t mind having the application blocked while the back end performs its task (or blocking it is intended), you can follow the KISS principle and simply make a single synchronous request.

Polling is also an option if the developers don’t feel like implementing WebSockets and the additional network traffic is of no concern.

Thanks for reading! And I hope you liked the article 🙂