The power of prediction by the numbers

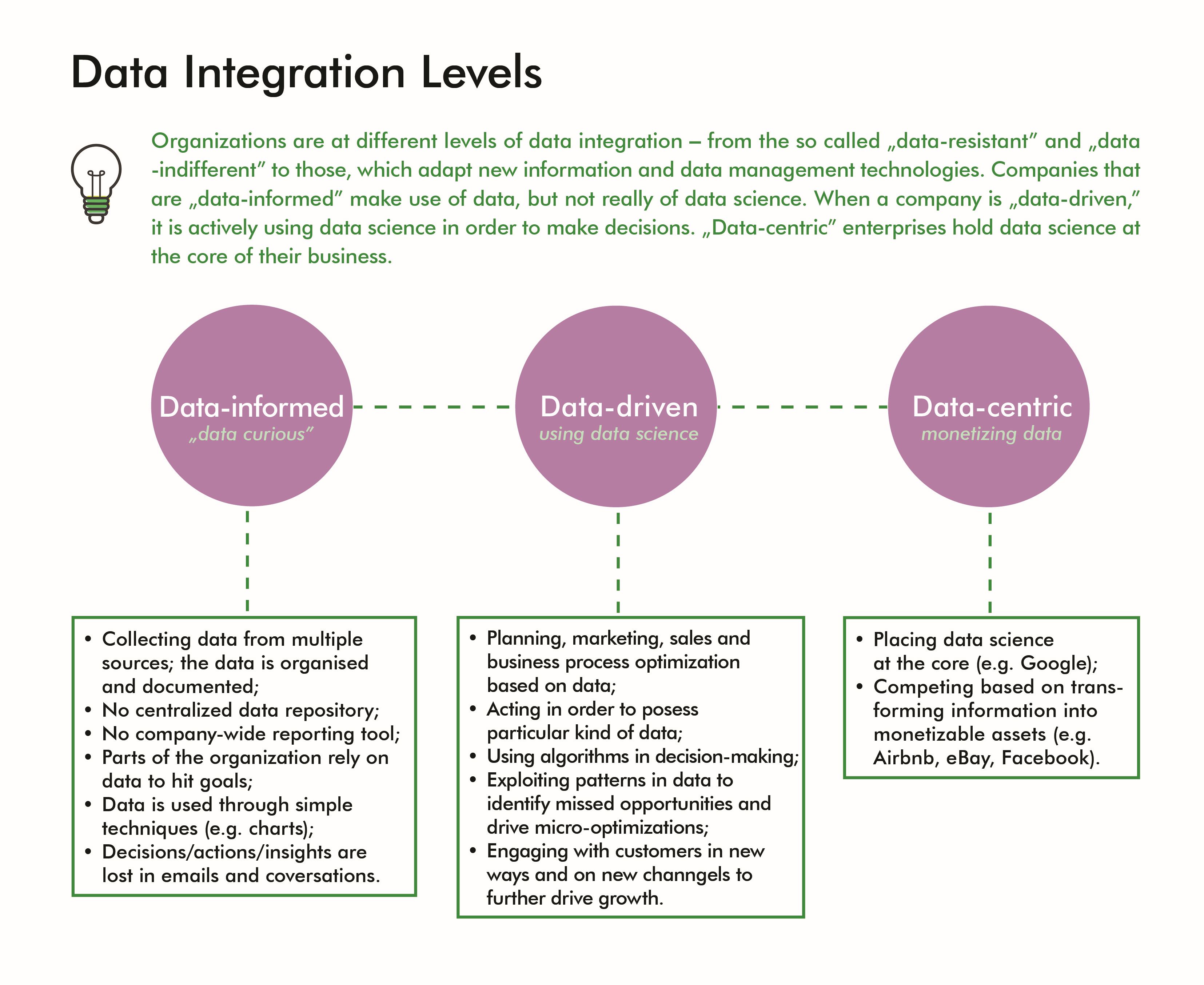

Between 2005 and 2010 the computerization of processes began in earnest, moving many professional practices and tasks from analogue to digital form. Since then, a common aim of enterprises in the digital transformation era has been phrased as “becoming a data-driven company,” i.e. relying on hard data when making business decisions, rather than on intuition and observation alone. Better information management capabilities often translate into higher volume and growth, lower costs, improved performance, and product innovation, to name a few.

Data processing tools have improved steadily over the past decade. As Peter Sondergaard famously said, “Information is the oil of the 21st century, and analytics is the combustion engine.” Moreover, the price of storing data keeps falling. And as more and more information floods businesses (from simple sensors that measure temperature, telemetry, and more advanced devices that analyze the condition of equipment and locate it in buildings, to phones with GPS and e-mails), analysts and managers gain ever more opportunities to use it for business development.

How can we help you?

Let’s look at this from the perspective of one of our prospective clients, a company that rents construction equipment such as excavators, cranes, and trucks. Its management wants to improve and automate the quoting process, or at least enable its team of twenty sales representatives to make pricing decisions semi-automatically.

To answer a simple question, “How much will it cost to rent a piece of equipment for a specified period of time?”, a number of factors must be taken into account. In addition to technical data such as timing, location, travel duration and mileage, load, and fuel consumption, there are various other elements, such as insurance conditions or the procedure in case of damage, which make it a fairly tricky calculation. Where the risk is higher, as with companies that habitually return equipment damaged, the managers may have to offer a higher price. On the other hand, clients who always return equipment in pristine condition and pay on time should enjoy more favorable pricing. Moreover, some offers may seem beneficial, yet the company might not profit from them because of one or two hidden factors.
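To make the reasoning above concrete, here is a minimal sketch of a rule-based quote. Everything in it is an assumption made for illustration: the `QuoteRequest` fields, the per-kilometre cost, and the surcharge and discount percentages are hypothetical and do not come from any real pricing policy.

```python
from dataclasses import dataclass


@dataclass
class QuoteRequest:
    daily_rate_eur: float  # base rental rate per day (hypothetical value)
    days: int              # rental period
    distance_km: float     # transport distance to the construction site
    damage_rate: float     # share of past rentals returned damaged, 0..1
    pays_on_time: bool     # payment history of the client


def quote_price(req: QuoteRequest,
                cost_per_km_eur: float = 1.5,
                risk_surcharge: float = 0.20,
                loyalty_discount: float = 0.05) -> float:
    """Return a quote in EUR: base cost adjusted for client risk."""
    # Base cost: rental time plus transport of the equipment.
    base = req.daily_rate_eur * req.days + req.distance_km * cost_per_km_eur
    # Clients who often return damaged equipment pay a proportional surcharge.
    adjustment = risk_surcharge * req.damage_rate
    # Clients with a clean record who pay on time get a small discount.
    if req.damage_rate == 0 and req.pays_on_time:
        adjustment -= loyalty_discount
    return round(base * (1 + adjustment), 2)


careful = QuoteRequest(daily_rate_eur=400, days=10, distance_km=100,
                       damage_rate=0.0, pays_on_time=True)
risky = QuoteRequest(daily_rate_eur=400, days=10, distance_km=100,
                     damage_rate=0.5, pays_on_time=False)
print(quote_price(careful), quote_price(risky))
```

Even this toy version shows why hand-written rules do not scale: every new factor adds another parameter that someone has to guess, which is exactly what a model trained on historical data is meant to replace.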

With several dozen such quote requests per day, and each bid affected by a dozen or so factors, it is inefficient for each sales representative to prepare a quote based solely on their own calculations, past experience, and intuition. In other words, the quoting process is too complex to be handled efficiently by human judgment alone. It is, however, well suited to an approach known as data-driven (or data-directed) decision making, DDDM.

Base your actions upon mathematical reason thanks to data

Data-driven decision making at its core means that decisions should be based on key data sets that show the projected value of each option and how it might perform. Thanks to machine learning (ML) methods, which are used to collect and process data, we can verify which data elements really affect whether an offer benefits our bottom line, validate our decisions before making them, and reduce bias by basing decisions on large amounts of current, real-time data. You can dig deeper into the insights to find additional sales opportunities and identify underperforming areas that affect overall product sales. In addition to increasing efficiency, this approach can potentially teach us things that we’ve been misinterpreting for decades.

Data-driven decision making is used in academia, business, and government to measure things in fine detail, as they occur. As a business technology, it has advanced rapidly in recent years, becoming ever more fundamental in industries such as medicine, transportation, and equipment manufacturing.

State your goals, gather the proper data, structure the data

The key thing to remember when working with big data is that, to yield genuine value, the data at your disposal must be relevant to your aims, which in turn should be defined before any analysis. If your data is incorrect, you will see a distorted view of reality.

Once the right questions are asked and the business goals set, we approach the work with big data by structuring it. To ensure data quality, we categorize, organize, and catalog data across different tables, removing or correcting data that is incomplete or irrelevant. This is also an appropriate time to perform data targeting and add more data elements to better describe phenomena and find common patterns among the datasets. This is typically the moment when companies decide to use the services of an IT company that can help with this process.
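The cleaning step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the field names (`client_id`, `equipment`, `days`, `price_eur`) and the validity rules are hypothetical, and a real project would derive them from the client’s own systems.

```python
def clean_rentals(rows):
    """Drop incomplete or implausible records and normalize the rest."""
    required = ("client_id", "equipment", "days", "price_eur")
    cleaned = []
    seen = set()
    for row in rows:
        # Skip records that miss any required field.
        if any(row.get(field) in (None, "") for field in required):
            continue
        # Skip records whose numeric fields cannot be parsed.
        try:
            days = int(row["days"])
            price = float(row["price_eur"])
        except (TypeError, ValueError):
            continue
        # Skip implausible values.
        if days <= 0 or price <= 0:
            continue
        record = {
            "client_id": str(row["client_id"]).strip(),
            "equipment": str(row["equipment"]).strip().lower(),
            "days": days,
            "price_eur": price,
        }
        # Drop records that are duplicates after normalization.
        key = tuple(record.values())
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(record)
    return cleaned


raw = [
    {"client_id": "C1", "equipment": "Excavator", "days": "5", "price_eur": "2000"},
    {"client_id": "C1 ", "equipment": "excavator ", "days": 5, "price_eur": 2000.0},
    {"client_id": "C2", "equipment": "Crane", "days": "3", "price_eur": ""},
    {"client_id": "C3", "equipment": "Truck", "days": "-2", "price_eur": "500"},
    {"client_id": "C4", "equipment": "Truck", "days": "two", "price_eur": "500"},
    {"client_id": "C5", "equipment": "Crane", "days": "7", "price_eur": "4900"},
]
print(clean_rentals(raw))
```

Only the first and last records survive here; the others are a duplicate, a record with a missing price, one with a negative rental period, and one with an unparseable value.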

While preparing raw data for analysis, it’s important to remember that different sets of data are interpreted differently (for example, information from underwater versus above-water devices). A different interpretation is also required for information from external sources or other IT systems that we want to integrate. Collecting and structuring data to train an artificial neural network (ANN), an ML model that learns to perform tasks by considering examples, is already a big step that can illuminate certain things for us.

To prepare data so that a neural network can learn from it, we use historical data and take into account both the specifics of that data and the company’s operating model. This is an iterative process that we repeat many times to include all the necessary elements, so that it brings the greatest value. Some solutions will appear only along the way.
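As a rough illustration of learning from historical examples, the sketch below trains a tiny neural network with one hidden layer using plain stochastic gradient descent. It is not the model used in any real project: the function name `train_price_model`, the two input features (scaled rental length and damage rate), the synthetic "historical" prices, and all hyperparameters are assumptions chosen to keep the example self-contained.

```python
import math
import random


def train_price_model(samples, hidden=4, epochs=2000, lr=0.05, seed=0):
    """Train a one-hidden-layer network on (features, target) pairs.

    Returns (predict, losses); losses holds the mean squared error per epoch.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer with tanh activation, then a linear output.
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w1, b1)]
        return h, sum(w * hi for w, hi in zip(w2, h)) + b2

    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in samples:
            h, out = forward(x)
            err = out - y
            total += err * err
            # Backpropagate the error and update the weights in place.
            for j in range(hidden):
                grad_h = err * w2[j] * (1 - h[j] ** 2)
                w2[j] -= lr * err * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * grad_h * x[i]
                b1[j] -= lr * grad_h
            b2 -= lr * err
        losses.append(total / len(samples))

    def predict(x):
        return forward(x)[1]

    return predict, losses


# Synthetic "historical" data: features are (scaled rental length, damage rate),
# the target is a scaled price that grows with both.
history = [((d, r), 0.6 * d + 0.3 * r + 0.1)
           for d in (0.0, 0.5, 1.0) for r in (0.0, 0.5, 1.0)]
predict, losses = train_price_model(history)
print(losses[0], losses[-1], predict((0.5, 0.5)))
```

The per-epoch loss is the kind of signal that drives the iterations mentioned above: when it stops improving, the usual response is to revisit the data and features rather than only the model.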

Perform analytics-based sense making

Once we have built accurate, easily transformed data sets and measured them with statistical tools, we begin to analyze the information in order to answer the business questions identified earlier in the process. The insights, meaning a deep and intuitive understanding of phenomena, emerge not from mechanically applying analytical tools to data, but from an active process of engagement between data analysts and business managers. The uncovered knowledge can define the company’s development strategy, which generates value.

Creating a proof of concept (PoC) with innovative machine learning solutions requires competence in different areas, such as data integration, mathematics, SQL, and business processes. It requires many conversations and meetings, but above all, commitment from all parties involved. On the whole, this process takes many weeks; in our experience, on average 3-6 months from start to implementation. As with most investments, for the first several weeks our customers see no effects other than costs. Nonetheless, the concluding element of the process is the implementation of a final solution into the operational activity of the enterprise, or in other words, shedding light on the business questions that made us embark on this quest in the first place.

The cost of building a PoC is roughly equivalent to several dozen hours of a developer’s work, or to the cost of an advertising stand at an international trade fair (5,000-10,000 EUR). It’s not an exceptionally high cost compared to the benefits it can bring. At Inero Software we not only design prototypes, but also test them, improve them, and wrap the solutions in a graphical user interface (GUI) after consulting it directly with end users.

Calibrating to Industry 4.0

Markets and environments constantly change. It’s important to remember that, to stay relevant in a changing landscape, we can never be over-reliant on past experience. And even though the future unfolds in front of our eyes, with everyday analytics some of its aspects are within our grasp before they physically manifest.

Inero Software provides knowledge and expertise on how to successfully use cutting-edge technologies and data to shape the corporate digital products of the future.

#DDDM, #DataDrivenDecisionMaking, #DataDrivenDecisionManagement, #MachineLearning, #ArtificialNeuralNetwork, #ANN, #ProofOfConcept, #MinimumViableProduct, #CustomSoftwareDevelopment