Large language models (LLMs) are increasingly used to analyze and extract insights from extensive documents, including lengthy statistical reports in PDF format. However, not all models perform equally when processing large files, especially those exceeding 50 pages. In this post, we conduct a comparative analysis of three popular LLMs—OpenAI’s GPT based models: 4o-mini and o3-mini, and open-source DeepSeek R1—to evaluate their effectiveness in reading and analyzing statistical data from large PDFs. Our assessment focuses on three key factors: accuracy, response time, and cost estimation for each model.

To ensure a fair comparison, we utilized LiteLLM, a unified API that simplifies multi-model LLM benchmarking. By standardizing interactions across different LLM providers, LiteLLM allowed us to focus on evaluating LLM performance metrics rather than implementation differences.

A Unified API Approach

Comparing open-source and proprietary LLMs from different providers can be challenging due to their varying APIs. To standardize our testing, we utilized LiteLLM, a library that provides a consistent interface for interacting with multiple LLMs. This allowed for easier switching between models and facilitated a more objective AI model comparison. Here is how easy it is to switch models using LiteLLM’s unified API:

import litellm

# To use openai.

response = litellm.completion(model="o3-mini", messages=[{"content": "Hello", "role": "user"}])

# To use deepseek.

response = litellm.completion(model="deepseek/deepseek-reasoner", messages=[{"content": "Hello", "role": "user"}])

This simplified approach helped us compare models without worrying about implementation complexities.

DeepSeek vs. OpenAI – model overview

Before diving into the AI model benchmarking results, let’s define key concepts and introduce the core specifications of the tested models.

One of the most important parameters to consider in LLM benchmarking is the context window—the maximum input size a model can process at once. This is measured in tokens, which represent chunks of text rather than individual words. A larger context window allows the model to handle more extensive documents in a single request, which is particularly important when working with long statistical reports.

The pricing for LLMs is typically based on token usage, which can vary depending on the type of tokens being processed. There are generally three types of tokens involved in LLM pricing:

- Input Tokens: These are the tokens representing the user’s input, such as the text or prompt sent to the model for processing. The cost of input tokens is charged based on the number of tokens provided by the user in each request.

- Cached Input Tokens: Some models offer a caching mechanism, where previously used inputs are stored and reused in subsequent requests, reducing the need for reprocessing. This is often charged at a lower rate than fresh input tokens, as the model does not need to process them again from scratch.

- Output Tokens: These tokens represent the text or response generated by the model. Output tokens are charged based on the amount of text the model generates in response to the user’s input.

The models selected for this comparison are among the latest releases from the past several months. While they differ in pricing and capabilities, we aim to assess whether these differences translate into measurable performance variations. Below is a breakdown of the key characteristics of DeepSeek-R1, OpenAI 4o-mini, and OpenAI o3-mini:

| DeepSeek-R1 | OpenAI 4o-mini | OpenAI o3-mini | |

|---|---|---|---|

| Context Window | 128,000 tokens | 128,000 tokens (with a maximum output of 16,384 tokens) | 200,000 tokens (with a maximum output of 100,000 tokens) |

| Release Date | January 2025 | July 2024 | January 2025 |

| Pricing (per 1 million tokens) | Input: $0.55 Cached input: $0.14 Output: $2.19 | Input: $0.15 Cached input: $0.075 Output: $0.60 | Input: $1.10 Cached input: $0.55 Output: $4.40 |

| Input Formats | Text | Text, Images (including PNG, JPEG, GIF, WEBP) | Text |

| Output Formats | Text | Text | Text |

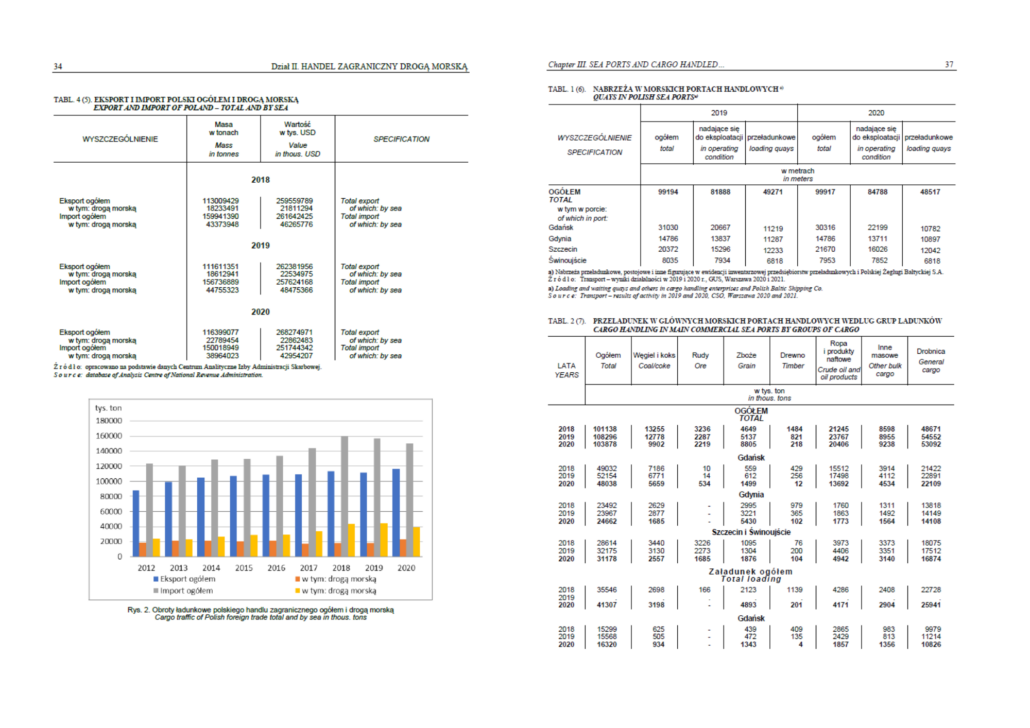

PDF file used for testing

The document used for testing is composed of several chapters of report on the Polish and worldwide maritime economy in 2017-2020. The report is 50 pages long and includes various statistics and analysis of cargo traffic, shipping, shipbuilding, and other maritime industries. The data in the file is formatted in tables and text. Most of the data is presented in tables, with additional explanations and summaries in the surrounding text. Example pages of the document used for testing:

Testing Methodology

We conducted a series of tests using the following maritime economy-themed prompts and a PDF file providing context information. Here are example prompts regarding information included in the PDF:

- Summarize the key economic findings from a maritime report.

- What is the total cargo turnover of Polish sea ports in 2020?

- What are the main cargo types handled by Polish sea ports?

- Which countries are the main trading partners of Poland in seaborne trade?

- What is the average age of ships in the Polish maritime transport fleet?

- What are the key economic indicators for the Polish shipbuilding industry?

As mentioned before, we compared the following models:

- OpenAI’s 4o-mini

- OpenAI’s o3-mini

- DeepSeek’s deepseek-resoner (R1)

We measured the following metrics:

- Inference Time – This refers to the time it takes for the model to generate a response after receiving a prompt. A lower inference time means faster responses, which is crucial for real-time applications and large-scale document processing.

- Token Usage – LLMs process and generate text in units called tokens. A token can be a word, part of a word, or even a punctuation mark. The total token usage includes both input tokens (the user’s query or document) and output tokens (the model’s generated response). The more tokens used, the higher the cost of the request.

- Response Cost – This is calculated as token usage × model pricing (per 1,000 or 1,000,000 tokens, depending on the provider). Since different models have different pricing structures, comparing response costs helps determine which model is more cost-effective for large-scale use cases.

Test Results

Here are the summarized results from our tests (each test was repeated several times):

| Model | Average Inference Time (s) | Average Response Cost ($) | Average Input Tokens | Average Output Tokens |

|---|---|---|---|---|

| DeepSeek R1 | 57.2 | 0.0039 | 63961.7 | 751.6 |

| o3-mini | 13.8 | 0.0755 | 63251.5 | 1162.5 |

| 4o-mini | 9.5 | 0.0511 | 62538.0 | 1046.5 |

Key Observations

- Inference Time: DeepSeek consistently demonstrated longer inference times compared to both OpenAI models. This could be a significant factor for applications that prioritize fast processing.

- Response Cost: DeepSeek showed a competitive advantage in terms of cost, particularly for output tokens. Despite the longer inference time, DeepSeek’s overall cost per request remains lower than OpenAI o3-mini and 4o-mini. The lower response cost of DeepSeek can be attributed to its caching mechanism, which reduces the need to reprocess input data. Most of the input content, particularly the PDF file’s contents, was cached, leading to significant savings in processing costs. This caching system allowed DeepSeek to handle repeated queries more efficiently, making it a cost-effective option for processing large documents.

- Output Variability: The models varied in style and the level of detail in their responses. This is important depending on the context and user requirements (e.g., high-level summaries vs. detailed analysis).

- LiteLLM API: LiteLLM made it extremely easy to track cost, token usage, and response time directly from the API responses, enabling a straightforward comparison between models.

Conclusion

Our tests highlight the advantages of using unified APIs for LLM benchmarking. LiteLLM significantly simplified the process, allowing us to focus on LLM efficiency assessment and evaluating AI language models. While DeepSeek R1 demonstrated competitive cost-effectiveness, particularly due to its caching mechanism, it was by far the slowest model in our tests, with an average inference time of 57.2 seconds. In contrast, OpenAI o3-mini and 4o-mini provided significantly faster response times, making them more suitable for real-time applications.