Implementing an AI-Powered Telephony Service Center with ElevenLabs & LiveAPI

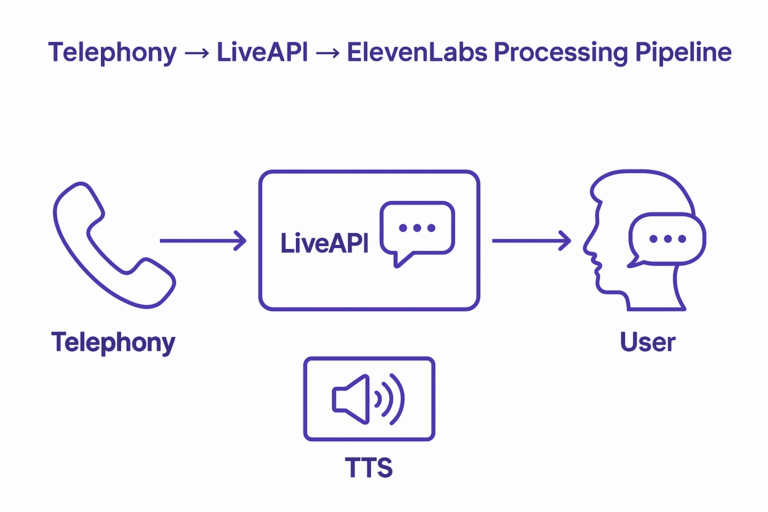

Over the past year, advancements in real-time AI models and high‑fidelity speech synthesis have accelerated the development of AI-driven telephony systems. At Inero, we’ve had the opportunity to integrate modern telephony solutions with LiveAPI technology and ElevenLabs’ voice engine to create a human‑like, responsive, GDPR‑compliant communication experience for a major corporate client.

This article combines two perspectives: a high-level overview of LiveAPI and ElevenLabs technology, and a behind‑the‑scenes look at our practical engineering experience while delivering a real-world AI telephony solution.

1. What Makes LiveAPI and ElevenLabs a Powerful Combination?

LiveAPI solutions such as the OpenAI Realtime API and the Google Gemini Live API replace the static prompt-and-response pattern with streaming, interactive communication. These systems support real-time audio input, low-latency responses, natural interruption handling, and multimodal context.

ElevenLabs complements this with industry‑leading voice synthesis. Its realistic, expressive voices and advanced prosody control enable AI agents that sound convincingly human. For telephony environments, this matters — clients expect clarity, confidence, and a pleasant conversational tone.

2. Why GDPR Compliance Shapes the Choice of API in Europe

For European organisations, GDPR compliance is not optional: it determines which AI vendors can be used in production. Although both OpenAI and Google offer real-time APIs, enterprises operating in the EU often restrict use to providers that ensure transparent, EU-aligned data governance. In practice, this meant that the Gemini Live API was the viable choice for our implementation, while OpenAI was excluded despite its strong technical capabilities.

3. Our Practical Experience Integrating Telephony with LiveAPI and ElevenLabs

Below we outline the key lessons, challenges, and engineering decisions from our implementation.

3.1 Project Context

Our client — a large corporate organisation — required a system capable of handling outbound and inbound calls automatically, while maintaining a tone and responsiveness extremely close to human interaction. The goal was not a simple IVR or menu system, but a natural, fully conversational experience driven by real‑time AI.

3.2 Technology Stack and Constraints

We evaluated both OpenAI and Gemini Live APIs to compare latency, contextual reasoning and streaming quality. However, due to GDPR compliance requirements, the production system was designed around Gemini Live API. ElevenLabs provided the speech synthesis layer, offering high realism and consistent quality across telephony channels.

3.3 Key Engineering Challenges

Beyond typical engineering concerns like audio quality, session stability, and call routing, the most demanding challenge was not purely technical — it was understanding how real users communicate over the phone. Subtle behaviors such as interruptions, hesitation, changing tone, or switching context required careful analysis and extensive testing.

We also dealt with several micro‑issues, such as premature call termination, incorrect end‑of‑utterance detection, and managing the timing between user speech and AI responses.
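One common way to approach end-of-utterance detection is an energy threshold combined with a trailing-silence ("hangover") window. The Python sketch below illustrates that general technique only; it is not the detector used in the project. It assumes 20 ms frames of 16-bit little-endian mono PCM, and the class name, threshold, and frame counts are hypothetical values that would need tuning for a real telephony line.

```python
import math
import struct
from dataclasses import dataclass, field


def rms(frame: bytes) -> float:
    """Root-mean-square level of a 16-bit little-endian mono PCM frame."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[: count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


@dataclass
class EndOfUtteranceDetector:
    # All three values are illustrative assumptions, not measured settings.
    speech_threshold: float = 500.0  # RMS level treated as speech
    min_speech_frames: int = 5       # ignore bursts shorter than this
    hangover_frames: int = 25        # trailing silence needed (25 x 20 ms = 500 ms)

    _speech_run: int = field(default=0, init=False)
    _silence_run: int = field(default=0, init=False)
    _in_utterance: bool = field(default=False, init=False)

    def feed(self, frame: bytes) -> bool:
        """Return True once, on the frame where the utterance is judged finished."""
        if rms(frame) >= self.speech_threshold:
            self._speech_run += 1
            self._silence_run = 0
            if self._speech_run >= self.min_speech_frames:
                self._in_utterance = True
            return False

        self._speech_run = 0
        self._silence_run += 1
        if self._in_utterance and self._silence_run >= self.hangover_frames:
            # Enough silence after real speech: close the utterance and reset.
            self._in_utterance = False
            self._silence_run = 0
            return True
        return False
```

The trade-off sits in `hangover_frames`: a short window makes the agent respond quickly but risks cutting in while the caller is only hesitating, whereas a long window sounds sluggish. Short pauses inside an utterance reset the silence counter as soon as speech resumes, so they do not end the turn.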

3.4 What We Built Ourselves

AI models are inherently non‑deterministic and cannot be fully controlled like classic software components. To ensure predictable and business‑aligned outcomes, we developed backend modules responsible for:

• Conversation flow supervision

• Session state tracking

• Monitoring and logging voice interactions

• Handling edge cases and ambiguous user inputs

ElevenLabs’ tooling, especially the Hard Disk service, significantly supported our workflow, but the orchestration layer was built entirely by Inero.
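To make the idea of flow supervision and session state tracking more concrete, here is a minimal Python sketch of one possible design: a small state machine that accepts or rejects stage changes proposed by the model and logs every decision. The stage names, the transition table, and the `CallSession` class are hypothetical and chosen for illustration; they do not describe the actual Inero modules.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    GREETING = "greeting"
    IDENTIFY = "identify"
    HANDLE_REQUEST = "handle_request"
    CLOSING = "closing"
    ENDED = "ended"


# Hypothetical business rules: which stage changes the backend will accept.
ALLOWED = {
    Stage.GREETING: {Stage.IDENTIFY},
    Stage.IDENTIFY: {Stage.HANDLE_REQUEST, Stage.CLOSING},
    Stage.HANDLE_REQUEST: {Stage.HANDLE_REQUEST, Stage.CLOSING},
    Stage.CLOSING: {Stage.HANDLE_REQUEST, Stage.ENDED},
    Stage.ENDED: set(),
}


@dataclass
class CallSession:
    call_id: str
    stage: Stage = Stage.GREETING
    log: list = field(default_factory=list)

    def request_transition(self, target: Stage, reason: str) -> bool:
        """Apply a stage change proposed by the model only if the rules allow it."""
        accepted = target in ALLOWED[self.stage]
        # Every proposal is logged, including rejected ones, for later review.
        self.log.append({
            "ts": time.time(),
            "from": self.stage.value,
            "to": target.value,
            "reason": reason,
            "accepted": accepted,
        })
        if accepted:
            self.stage = target
        return accepted
```

In this sketch the model can only propose a transition; the deterministic table decides. For example, a proposal to jump from `GREETING` straight to `ENDED` is rejected and logged, which is one way to guard against a call being terminated prematurely.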

3.5 What We Learned

The most important insight: designing a telephony AI system requires a deep understanding of the user’s context as well as the business objectives of the project. Rapid prototyping and iterative proof-of-concept (PoC) testing were essential, because they let us validate conversational patterns early, surface unexpected user behavior, and refine the interaction design.

Ultimately, success depended on aligning the AI’s conversational style with how real customers naturally speak, pause, and respond during a phone call.

4. GDPR Considerations in AI Telephony

All audio handling, session storage, and logging were designed according to GDPR principles: strict data minimisation, no training on user audio, encrypted transmission, and optional anonymisation of transcriptions. Where possible, processing was routed through EU‑aligned infrastructure.
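As an illustration of what optional anonymisation of transcriptions can look like at its simplest, the Python sketch below masks email addresses and phone-number-like digit sequences before a transcript is stored. The patterns and the `anonymise` function are assumptions made for this example, not the project's implementation. Regular expressions alone will miss names, addresses, and many other identifiers, so a production system would need more than this.

```python
import re

# Illustrative patterns only; real transcripts need broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# At least nine characters, starting and ending with a digit, optionally led by "+".
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymise(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    print(anonymise("Call me on +48 600 123 456 or mail jan.kowalski@example.com."))
```

Running the masking step before logging, rather than afterwards, fits the data-minimisation principle: the raw identifiers never reach persistent storage.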

Conclusion

Implementing an AI-driven telephony service center requires more than connecting APIs: it requires understanding users, managing nuanced conversational flows, and ensuring full compliance with EU regulations. Our experience shows that LiveAPI technologies combined with ElevenLabs can deliver highly human-like, responsive, and scalable communication channels for enterprise clients.