GPT – A DIFFERENT POINT OF VIEW

![]()

In the previous post, we looked at the possibilities of the GPT model in terms of supporting the work of a programmer. But that’s not the only thing this technology can do. GPT is a language model that utilizes a deep learning technique known as transformer. For example, this allows it to produce text that mimics human writing. It has been trained on an extensive collection of text found on the internet and can be adjusted for specific tasks such as language translation, text summarization and text generation. The model is multilingual and can respond to a broad spectrum of questions, commands and prompts. Additionally, it is capable of generating creative content like stories and poetry. It could be applied in various fields such as virtual assistants, chatbots and automated writing. However, it’s important to keep in mind that while it is highly advanced, GPT is not human and may not fully understand context of language nuances, thus it may produce errors or biases present in the training data.

In the previous post, we looked at the possibilities of the GPT model in terms of supporting the work of a programmer. But that’s not the only thing this technology can do. GPT is a language model that utilizes a deep learning technique known as transformer. For example, this allows it to produce text that mimics human writing. It has been trained on an extensive collection of text found on the internet and can be adjusted for specific tasks such as language translation, text summarization and text generation. The model is multilingual and can respond to a broad spectrum of questions, commands and prompts. Additionally, it is capable of generating creative content like stories and poetry. It could be applied in various fields such as virtual assistants, chatbots and automated writing. However, it’s important to keep in mind that while it is highly advanced, GPT is not human and may not fully understand context of language nuances, thus it may produce errors or biases present in the training data.

We asked ChatGPT question: “Describe your ability to summarize texts.”

Output: “As a language model, I am able to summarize documents by identifying and extracting the main points and condensing them into a shorter version while retaining the key information. I can summarize documents of various formats such as text, PDFs, and articles. However, my ability to summarize a document may depend on the length and complexity of the document, as well as the quality of my fine-tuning. Additionally, my summary may not always be entirely accurate, as I am not capable of understanding the context or nuances in the same way a human would, but I can provide a summary which is more readable, informative and precise based on the data I have been trained on.”

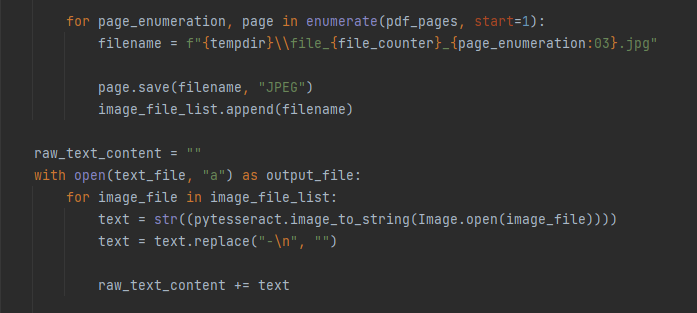

Based on this information, we will conduct an experiment to test the efficiency of davinci-003 model during analyzing some documents that were prepared by us especially for this purpose. However, if we want the model to be able to effectively analyze the content of PDFs documents, we should provide it in the form of a prompt. For this we will use OCR technology and pyTesseract.

![]()

PREPARING THE DATA AND THE CODE

USING OCR

OCR stands for Optical Character Recognition, which is a technology used to convert scanned images or PDFs of text into machine-readable text. pyTesseract is a Python wrapper for Google’s Tesseract-OCR Engine, which allows for easy integration of OCR capabilities into Python scripts. pyTesseract can be used to extract text from images, scanned documents, or PDFs. It is an open-source library, and it can be easily installed via pip.

INTEGRATION WITH AN API

The next step is to use endpoint prepared for handling text completions. We can interact with the API through HTTP requests from many languages, for example via official Python bindings, Node.js library, or a community-maintained library. This time we will make use of the first one. We can add it to the project simply using following command:

pip install openai

All requests should include our API key in an Authorization HTTP header. A key should be retrieved from API Keys page and used in every request.

openai.api_key = os.getenv(“OPENAI_API_KEY”)

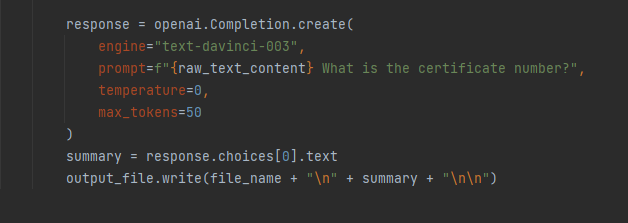

In case of summarizing input text we can use endpoint that creates a completion for the provided prompt and parameters.

POST https://api.openai.com/v1/completion

- model is the specific pre-trained language model that the OpenAI API will use to generate text.

- prompt is the input text that the API will use as a starting point for generating new text. This can be a sentence or a paragraph, and is used to provide context for the text generation.

- max_tokens is an integer value that specifies the maximum number of tokens (words or word pieces) that the API will generate in its response.

- temperature is a value that controls the “creativity” of the generated text. Lower values will produce text that is more conservative and similar to the input prompt, while higher values will produce text that is more varied and creative.

DATA PREPARATION

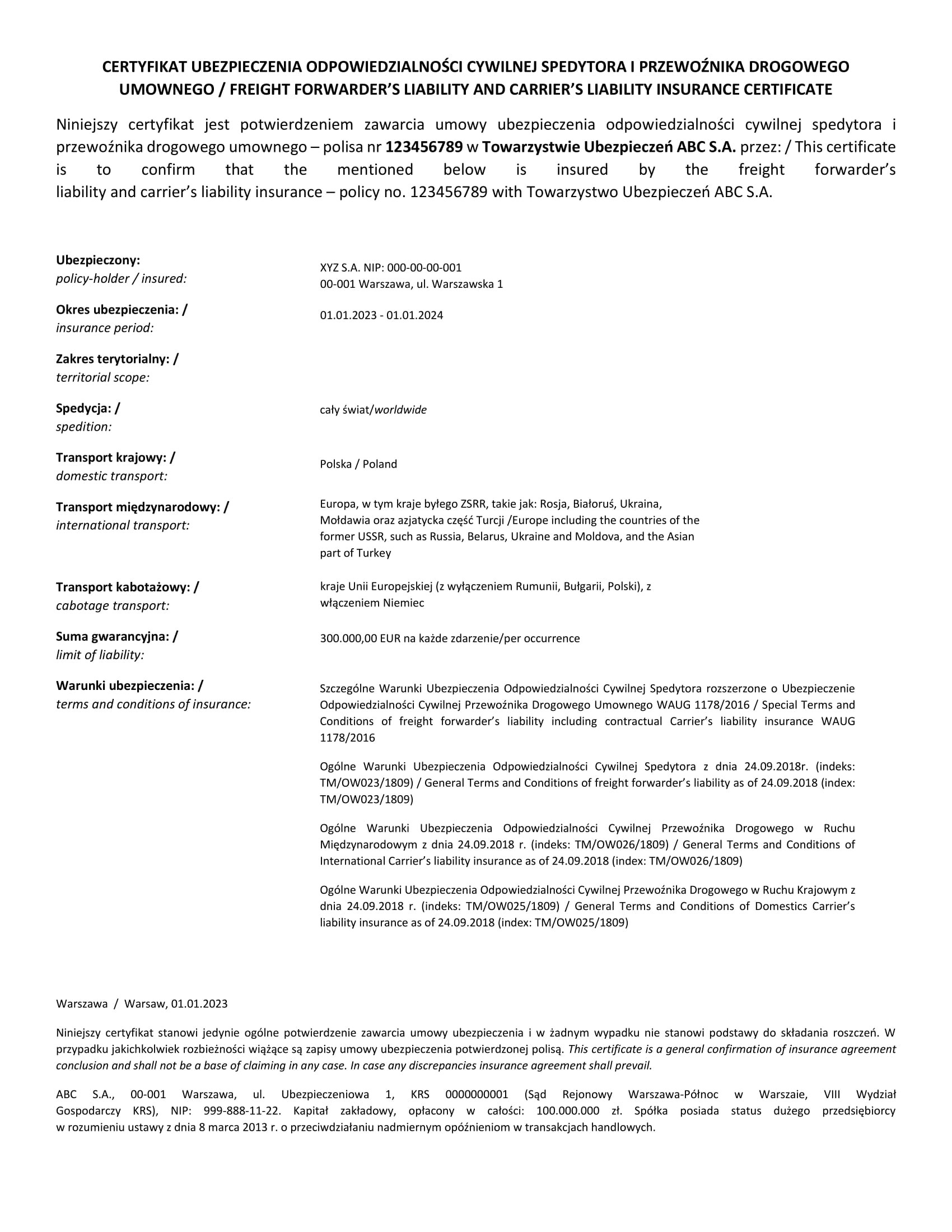

Now we will use some Carrier’s Liability Insurance certificates containing fake data.

Carrier’s liability insurance is a type of insurance coverage that protects a carrier, such as a shipping company, against claims made by the customer for loss or damage to the goods being transported. It provides financial protection to the carrier in the event that goods are damaged or lost while in transit.

Here we have the structure of these documents:

For the purposes of this experiment, we have prepared 6 variants of such a document, which are available for download HERE. They differ, for example, in the insured, the insurance period, the limit of liability, the insurer, and the territorial scope.

For each of the variants, 7 questions were asked to check how well the model deals with understanding the content.

- Who is the insured? Give me name and NIP number.

- Who is the insurer?

- What is the limit in general liability?

- What is the certificate number?

- What is the scope of international transport?

- Where this documents was issued?

- What is the insurance period?

Below are the answers:

OCP-01

The insured is XYZ S.A. with NIP: 000-00-00-001. The insurer is Towarzystwo Ubezpieczen ABC S.A. The limit in general liability is 300.000,00 EUR per occurrence. The certificate number is 123456789. The scope of international transport is Europe including the countries of the former USSR, such as Russia, Belarus, Ukraine and Moldova, and the Asian part of Turkey. This document was issued in Warsaw, Poland and the insurance period is from 01.01.2023 to 01.01.2024.

OCP-02

The insured is ZYX S.A. with NIP: 000-00-00-002. The insurer is Towarzystwo Ubezpieczen BCA S.A. The limit in general liability is 150.000,00 EUR per occurrence. The certificate number is 234567891. The scope of international transport is Europe including the countries of the former USSR, such as Russia, Belarus, Ukraine and Moldova, and the Asian part of Turkey. This document was issued in Warsaw, Poland and the insurance period is from 01.01.2024 to 01.01.2025.

OCP-03

The insured is YXZ S.A. with NIP: 000-00-00-003. The insurer is Towarzystwo Ubezpieczen CBA S.A. The limit in general liability is 420.000,00 EUR per occurrence. The certificate number is 345678912. The scope of international transport is Europe including the countries of the former USSR, such as Russia, Belarus, Ukraine and Moldova, and the Asian part of Turkey. This document was issued in Warsaw, Poland and the insurance period is from 01.06.2023 to 01.06.2024.

OCP-04

The insured is CCC S.A. with NIP: 000-00-00-004. The insurer is Towarzystwo Ubezpieczen VCX S.A. The limit in general liability is 500.000,00 EUR per occurrence. The certificate number is 456789123. The scope of international transport is Europe including the countries of the former USSR, such as Russia, Belarus, Ukraine and Moldova, and the Asian part of Turkey. This document was issued in Warsaw, Poland and the insurance period is from 01.02.2023 to 01.02.2024.

OCP-05

The insured is CCTA S.A. with NIP: 000-00-00-005. The insurer is Towarzystwo Ubezpieczen VVV S.A. The limit in general liability is 440.000,00 EUR per occurrence. The certificate number is 678912345. The scope of international transport is countries of the European Union (excluding Spain and Portugal), including Germany. This document was issued in Warsaw, Poland and the insurance period is from 02.04.2023 to 02.04.2024.

OCP-06

The insured is AVX S.A. with NIP: 000-00-00-005. The insurer is Towarzystwo Ubezpieczen HFX S.A. The limit in general liability is 750.000,00 EUR per occurrence. The certificate number is 567891234. The scope of international transport is countries of the European Union (excluding Romania, Bulgaria, Poland), including Germany. This document was issued in Warsaw, Poland and the insurance period is from 02.07.2023 to 02.07.2024.

![]()

SUMMARY

The model correctly interpreted the data contained in the documents, despite some potential issues related to OCR tool analyzing multi-column text. The model totally dealt with the text in Polish and correctly processed the information contained therein, despite the question written in English. Requests were processed separately, per document, but still consistency in text style was maintained. We can assume that in the near future such solutions will become helpful in broadly understood information processing.

The model correctly interpreted the data contained in the documents, despite some potential issues related to OCR tool analyzing multi-column text. The model totally dealt with the text in Polish and correctly processed the information contained therein, despite the question written in English. Requests were processed separately, per document, but still consistency in text style was maintained. We can assume that in the near future such solutions will become helpful in broadly understood information processing.