Retrieval-Augmented Generation (RAG) models are transforming the capabilities of intelligent assistants, enabling more accurate and context-aware responses to user queries. Unlike traditional large language models (LLMs), RAG-based systems integrate two essential components: a retrieval mechanism that fetches relevant documents and a generative model that synthesizes responses based on real-time data. This post explores how DeepEval helps systematically assess the effectiveness of both retrieval and generation components, ensuring more reliable machine-generated insights.

While RAG-enhanced virtual assistants significantly improve answer relevance, evaluating their performance remains a challenge. Since these models rely on both retrieval and text generation, a weak document-fetching step can lead to misleading or incorrect responses, even if the underlying LLM is highly advanced.

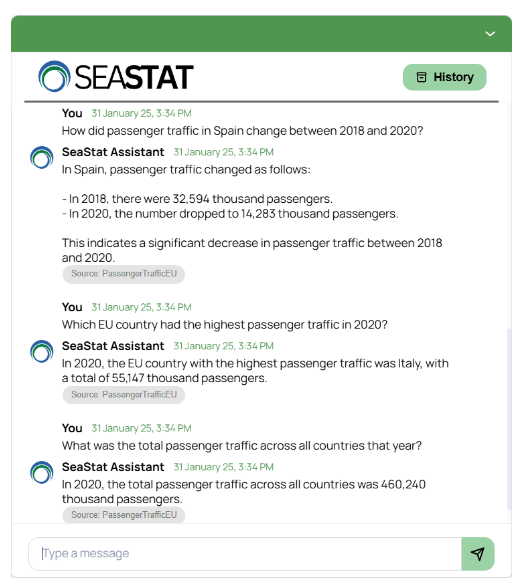

We’ll demonstrate this process using our custom AI-driven assistant, designed to answer complex queries about maritime economy statistics, showcasing how LLM-powered knowledge retrieval enhances data-driven decision-making.

SeaStat - Our AI Assistant

A great example that we can use to discuss this topic is the SeaStat AI Assistant developed by us as part of the Incone60 Green Project (https://www.incone60.eu/). The goal of the project is to improve the competitiveness and sustainable development of small seaports in the South Baltic region.

During Incone60 Gren Project we have developed an AI assistant that answers questions about maritime economy data, providing instant access to structured maritime economic insights. This assistant leverages a Retrieval-Augmented Generation (RAG) approach, ensuring that responses are grounded in a structured database covering key aspects such as seaports, maritime transport, shipbuilding, passenger traffic, trade, and the fishing industry.

Our AI assistant operates within a RAG pipeline that integrates:

- A structured maritime economy database, which includes global and Polish maritime statistics from 2017 to 2020. The data is sourced from publications by Gdynia Maritime University, which aggregate statistics from various government institutes, universities, and port enterprises. The database consists of 50 tables, covering key aspects of maritime transport and is planned to be further extended with additional years.

- Dynamic SQL generation to extract relevant information from the database.

- A generative LLM that formulates answers based on the retrieved data.

Building such an assistant requires several key decisions and parameter optimizations, including:

- Selecting the most suitable LLM model and tuning parameters (e.g., temperature).

- Designing an effective prompt structure.

- Ensuring the assistant consistently selects the most relevant tables from the dataset.

This is where automatic testing becomes crucial. It helps assess system performance, identify weaknesses, and ensure continuous improvement.

LLM-as-a-Judge: Automating RAG Model Evaluation

Evaluating systems that generate non-deterministic, open-ended text outputs can be challenging because there is often no single “correct” answer. While human evaluation is accurate, it can be costly and time-consuming.

LLM-as-a-Judge is a method that approximates human evaluation by rating the system’s output based on custom criteria tailored to your specific application. One such testing framework is DeepEval, which provides a set of metrics designed for both retrieval and generation tasks and allows you to create your own rating criteria.

Key evaluation metrics are:

- G-Eval: A versatile metric that evaluates LLM output based on custom-defined criteria.

- Answer Relevancy: Measures how well the model’s response addresses the user query.

- Faithfulness: Assesses how accurately the response aligns with the provided context, helping to limit hallucination in RAG systems.

- ContextualRecallMetric, ContextualPrecisionMetric, ContextualRelevancyMetric: These metrics are particularly useful for RAG systems, evaluating whether retrieval components return all relevant context while avoiding irrelevant information.

Step-by-Step RAG Model Testing with DeepEval

To ensure the reliability and accuracy of our Retrieval-Augmented Generation (RAG) model, we follow a structured evaluation approach. This process involves dataset creation, response generation, and model evaluation using DeepEval, allowing us to systematically assess the effectiveness of both retrieval and generation components. Let’s break down each step in detail.

1. Dataset Creation

To evaluate performance, we create a test set consisting of:

– Realistic questions that users might ask. These can range from simple fact-based queries to more complex, multi-step inquiries that require detailed answers drawn from multiple tables.

– Expected ground truth responses derived directly from the database.

2. Generating Model Responses

For each test query, the assistant generates an answer based on the relevant data retrieved from the database.

3. Evaluation using DeepEval

We are particularly focused on factual correctness for our assistant, so we use the G-Eval metric to evaluate this aspect.

We need to define G-Eval by describing testing criteria, e.g.:

correctness_metric = GEval(

name="Correctness",

evaluation_steps=[

"Assess whether the actual output is accurate in terms of facts compared to the expected output.",

"Penalize missing information."

],

evaluation_params=[

LLMTestCaseParams.INPUT,

LLMTestCaseParams.ACTUAL_OUTPUT,

LLMTestCaseParams.EXPECTED_OUTPUT

],

)

Additionally, we use several built-in metrics:

contextual_precision = ContextualPrecisionMetric()

contextual_recall = ContextualRecallMetric()

contextual_relevancy = ContextualRelevancyMetric()

answer_relevancy = AnswerRelevancyMetric()

faithfulness = FaithfulnessMetric()

We then define test cases:

test_case = LLMTestCase(

input=#user prompt,

actual_output=#model output here,

expected_output=#the ground truth response

retrieval_context=#data extracted by retriever, in our case it is data extracted from the database

)

Here is one of test cases we used to evaluate our SeaStat Assitant:

test_case = LLMTestCase(

input='Compare cargo traffic in Suez Canal and Panama Canal in 2019',

actual_output= 'In 2019, the cargo traffic data for the Suez Canal and Panama Canal was as follows: Suez Canal - 1031 million tons; Panama Canal - 243059 thousand tons. The Suez Canal had significantly higher cargo traffic compared to the Panama Canal in 2019.'

expected_output=' In 2019, the Suez Canal handled 1,031 million tons of cargo, whereas the Panama Canal transported only 243 million tons. This indicates that the Suez Canal carried a substantially higher volume of cargo than the Panama Canal that year.'

retrieval_context=[

{'table': 'Suez_Canal_Cargo_Traffic', 'year': 2019, 'cargo_volume_million_tons': 1031},

{'table': 'Panama_Canal_Cargo_Traffic', 'year': 2019, 'direction': 'Atlantic – Pacific', 'cargo_volume_thousand_tons': 156899}, {'table': 'Panama_Canal_Cargo_Traffic', 'year': 2019, 'direction': 'Pacific – Atlantic', 'cargo_volume_thousand_tons': 86160}

]

)

And run evaluation:

assert_test(test_case, [correctness_metric, answer_relevancy, contextual_precision, contextual_recall, contextual_relevancy, faithfulness])

4. Testing results

DeepEval assigns each metric a score between 0 and 1, accompanied by a descriptive explanation of the rating. Below are the results from a test case evaluating SeaStat’s response to the prompt:

“Compare cargo traffic in the Suez Canal and Panama Canal in 2019.”

Metric interpretations:

- Contextual Recall (1.0) – The retriever effectively retrieved the necessary information, meaning that almost all essential details from the expected output were present in the retrieval context.

- Contextual Relevancy (0.95) and Contextual Precision (1.0) – The retrieved context was highly relevant to the query, showing that the retriever pulled information accurately related to the input.

- Faithfulness (1.0) – The model’s response remained perfectly factual, strictly adhering to the retrieved information without introducing any hallucinations.

- Answer Relevancy (1.0) – The model’s response fully addressed the user query, ensuring that the answer was on point.

- Correctness, (0.78) – the correctness score was slightly lower due to numerical discrepancies caused by rounding.

By systematically analyzing test cases with DeepEval, we gain valuable insights into where our RAG model excels and where improvements are needed. Future optimizations could include refining retrieval strategies, adjusting prompt engineering, or fine-tuning LLM parameters for better factual accuracy.

Test case | Metric | Score | Status | Overall Success Rate |

test_case_0

| Correctness (GEval) | 0.78 (threshold=0.5, evaluation model=gpt-4o, reason=The actual output closely matches the expected output in terms of cargo volumes and comparative conclusion, but the numbers are expressed in different units (thousand tons vs million tons) and slightly differ, which may indicate rounding or conversion discrepancies., error=None) | PASSED | 100% |

Answer Relevancy | 1.0 (threshold=0.5, evaluation model=gpt-4o, reason=The score is 1.00 because the response thoroughly addressed the comparison of cargo traffic in the Suez Canal and the Panama Canal in 2019 with no irrelevant details included. It’s precise and to the point, showcasing a deep understanding of the topic., error=None) | PASSED | ||

Contextual Precision | 1.0 (threshold=0.5, evaluation model=gpt-4o, reason=The score is 1.00 because the relevant nodes, offering essential data for comparing cargo traffic in the Suez and Panama Canals in 2019, are perfectly ranked at the top. These nodes effectively deliver a comprehensive breakdown of cargo volumes through both canals during that year, ensuring accurate comparisons can be made efficiently., error=None)

| PASSED | ||

Contextual Recall | 1.0 (threshold=0.5, evaluation model=gpt-4o, reason=The score is 1.00 because every sentence in the expected output aligns perfectly with the data from the nodes in the retrieval context, effectively illustrating the significant difference in cargo volumes handled by both canals. Well done on maintaining precise and accurate attention to detail!, error=None)

| PASSED | ||

Contextual Relevancy | 0.95 (threshold=0.5, evaluation model=gpt-4o, reason=The score is 0.95 because although the context is rich with detailed data on Suez Canal cargo traffic, it lacks specific information on the Panama Canal’s cargo traffic, necessitating additional data for a complete comparison., error=None)

| PASSED | ||

Faithfulness | 1.0 (threshold=0.5, evaluation model=gpt-4o, reason=Awesome job! The score is 1.00 because there are no contradictions present, showcasing perfect alignment and faithfulness of the actual output to the retrieval context. Keep up the excellent work!, error=None)

| PASSED |

Evaluating Retrieval-Augmented Generation (RAG) models requires a structured approach to ensure both retrieval accuracy and response reliability. LLM-as-a-Judge provides an efficient alternative to human evaluation by systematically assessing outputs based on predefined criteria, enabling scalable and cost-effective validation.

Using DeepEval, we tested our AI-driven SeaStat Assistant against key evaluation metrics, including Correctness (G-Eval), Answer Relevancy, Contextual Precision, Contextual Recall, Contextual Relevancy, and Faithfulness. The results highlighted minor discrepancies in numerical representation, missing contextual details, and retrieval precision—insights crucial for refining model performance.

These findings emphasize that even high-performing RAG models require rigorous evaluation to ensure factual accuracy and prevent misleading outputs. By automating this process, we enable continuous model improvement, ensuring AI-driven assistants deliver reliable, context-aware insights at scale.

AI-powered assistants are undoubtedly a technology that will become an indispensable tool for employees at all levels—from executives and directors to specialists. Their dynamic development allows them to instantly adapt to business needs and evolving expectations.